01/02/2008 - EHP publish Powerwatch response to Eltiti "Essex" Study

Environmental Health Perspectives, the journal that published the "Essex Study" (Eltiti et al, 2007), has now published our response (plus two others, also critical of the study paper) and the authors' overall responses to them. The study authors dispute our letter but do not address its main underlying point, which was that their actual study findings do not match the conclusions they draw in the paper reporting their work.

Symptom Responses

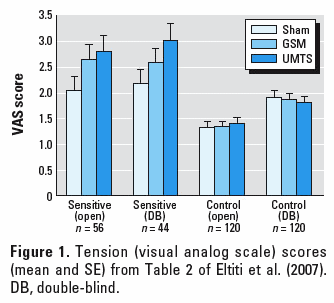

In our letter, we stated that the authors' conclusion "[EHS] individuals are unable to detect the presence of rf-emf [radio frequency electromagnetic fields] under double-blind conditions" was erroneous, a statement they claimed was "completely unfounded". They go on to state that the sensitive participants were unable to detect when the exposure was real (i.e. non-sham). The definition of "detection" used in the study was a statistically significant level of accuracy among the sensitive participants in identifying when they were being exposed to a "real" signal. We believe that the subjective symptom responses should also be considered part of the detection process, as they are a clearly defined response, even if at a subconscious level.

In our letter, we pointed out that there was a statistically significant effect on the participants' symptoms for UMTS exposure under double-blind conditions, and we considered this to be a sign of "detection". In the original paper the authors wrote off this significant result as a product of the order of the tests, because UMTS was disproportionately often the first exposure. However, we believe that this is unlikely (as demonstrated below), as the GSM exposure was also associated with an increase in symptoms, albeit one that did not achieve statistical significance.

The authors' response also assumed that our conclusion arose "from a misunderstanding of the receiver operating characteristic (ROC) curve analysis". This assumption was simply wrong: our conclusion had nothing to do with our comment on the ROC analysis, which was more of a remark that it was a convoluted and unhelpful way of displaying the data. Our conclusion arose because the data appeared to show a fairly consistent difference between the control and sensitive groups.

Selection and Resolving Power

There is a serious flaw in that all of the measured responses were entirely subjective.
Initially there was a questionnaire (the methodology of which is itself a peer-reviewed, published paper) designed to filter the groups by removing self-reported sensitive participants who did not appear to show any signs of sensitivity. Unfortunately, because there were not enough people for the tests, this filtering was not carried out, so a separate method is needed to allow for the subjectivity of the responses.

This was compounded by the inadequate resolving power of the study. The authors state in their response that "the statistical power (0.75) in our study was actually very high for this field of research", which is entirely irrelevant. In the paper itself they admitted that this was not sufficient to detect statistical significance at the expected level of effect:

"Assuming there is a small effect of rf-emf on human health (d=0.40), and that sometimes this effect is positive and sometimes negative (two-tailed), it was calculated that 66 participants per group were needed to have a power level of .90 to detect a within-subjects effect (i.e. difference between real and sham exposure conditions) and 132 participants per group were need to detect a between-subjects effect (i.e. group by exposure condition interaction) for a total of 264 participants (Howell, 1997)"

Eltiti et al, 2007

Regardless of how well a resolving power of 0.75 compares to other studies in the field, if it is not enough to find the expected level of effect then it is not good enough. Perhaps it is instead evidence that this type of study is simply not appropriate in the first place, if sufficient resolving power cannot be expected?

Perceived Sensitivity and Subjectivity

There are also grave questions regarding the ability of participants to correctly diagnose themselves as objectively sensitive, especially in an area of such heated controversy. To address this issue, the authors say that "Second, extensive pilot testing and interviews with study participants revealed that the people we tested reported that they usually experience their typical symptoms within minutes of being exposed to EMF signals. The fact that the symptoms were elicited under the open provocation, but not in the double-blind session, provides evidence that these sensitive people experienced a number of unpleasant symptoms, but these were not related to the presence of an EMF signal."

This rests entirely on the assumption that the study participants were correct in their belief that they truly reacted within a few minutes in the way expected. What the study demonstrated very clearly was that a number of participants very likely did not react to the EMF signal, and that their reaction is considerably more likely to be a nocebo response. However, this does not exclude the possibility that a sub-section of the sensitive group were genuinely reacting. As we have already discussed, the resolving power would not be sufficient to detect a statistically significant effect even if every sensitive participant was objectively sensitive. It is already established that the nocebo response is real, and potentially significant where there is genuine widespread concern about a health risk from a perceived cause.
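As a rough cross-check on the resolving power point, the sample sizes quoted from the paper (66 per group for a within-subjects effect and 132 per group for a between-subjects effect, for d = 0.40 at a power of .90) can be approximated with a standard normal-approximation formula. The sketch below is our own illustration and not the authors' calculation: the function name is hypothetical, and the significance level of 0.05 is an assumption, since the quoted passage does not state it.

```python
from math import ceil
from statistics import NormalDist


def participants_needed(d, power, alpha=0.05, between_subjects=False):
    """Approximate participants per group for a two-tailed test.

    Uses the normal approximation n = ((z_(1-alpha/2) + z_power) / d)^2,
    doubled for a between-subjects comparison. alpha=0.05 is an assumption;
    the quoted passage does not give the significance level.
    """
    z = NormalDist().inv_cdf  # quantile function of the standard normal
    n = ((z(1 - alpha / 2) + z(power)) / d) ** 2
    if between_subjects:
        n *= 2
    return ceil(n)


within = participants_needed(0.40, 0.90)
between = participants_needed(0.40, 0.90, between_subjects=True)
print(within, between)
```

Under these assumptions the approximation gives 66 and 132 participants per group, in line with the figures quoted from the paper.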
It is absolutely essential to address the nocebo response as a confounding factor by analysing the perceived sensitive group and removing those who appear to show no difference in response between the real and sham exposures. As mentioned, due to the already small number of active participants, this was never carried out in this study.

Actual Sensitivity and Detection

It is generally accepted (the paper itself refers to this) that the sensitive group are more likely to claim they are being exposed when they are not, and vice versa for the control group. We confirmed this with the following analysis of the data in the study (bearing in mind that there are 2 real exposures to 1 sham "exposure", we would expect participants to guess correctly that the exposure was real two thirds of the time and that the exposure was sham only one third of the time, assuming no personal bias on the part of the participant):

This confirms that the control group heavily over-reported that they were not being exposed, but provides little evidence that the sensitive group over-reported that they were being exposed; the data is very hard to interpret. Indeed, this is one of the biggest confounding issues when attempting to assess the success rate of entirely subjective responses, especially when it is well known that at least one group has a strong personal bias on the issue.

However, adequately dealing with this confounder is not as complicated as it first appears. Assuming that guessing is random (the null hypothesis), and that the tendency to guess that the exposure is "on" or "off" is the same regardless of the actual exposure, we can use a ratio that compares the actual success rate with the expected success rate, regardless of any tendency to over-report or under-report exposure:

R (ratio) = (TP/FN) / (FP/TN)

TP = True Positive - Correctly identifying the "real" exposure

FN = False Negative - Judging a "real" exposure to be sham

FP = False Positive - Judging a sham exposure to be "real"

TN = True Negative - Correctly identifying the sham exposure

To demonstrate the purpose of this formula, let us assume that person "X" has a personal bias where they assume that the exposure is real 70% of the time. If the exposure is real 50% of the time, they will have an elevated number of "true positives" and "false positives", and a reduced number of "false negatives" and "true negatives". Importantly, these elevations and reductions will be proportionally the same, so if the guesses are random (aside from the personal bias) both sides of the ratio should match, giving R = 1. An R greater than one would signify that the proportion of accurate judgements was greater than the proportion of inaccurate judgements after taking personal bias into account, implying a genuine ability to detect the signal.
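The way personal bias cancels out of R can be checked with a few lines of code. This is a minimal sketch using made-up counts chosen purely for illustration (they are not data from the study), and the function name is hypothetical. R as defined here is the quantity usually called an odds ratio.

```python
def detection_ratio(tp, fn, fp, tn):
    """R = (TP/FN) / (FP/TN), computed from counts of judgements."""
    return (tp / fn) / (fp / tn)


# Hypothetical person "X": says "real" 70% of the time whatever the
# exposure, over 200 real and 100 sham trials (illustrative counts only).
biased_guesser = detection_ratio(tp=140, fn=60, fp=70, tn=30)

# Hypothetical person with the same leaning towards "real", but who says
# "real" more often when the exposure actually is real (80% vs 60%).
partial_detector = detection_ratio(tp=160, fn=40, fp=60, tn=40)

print(biased_guesser)        # 1.0: the bias alone does not move R
print(partial_detector > 1)  # True: better-than-chance discrimination
```

The first case shows that a strong tendency to report "real" leaves R at 1 as long as that tendency is the same under real and sham exposure; only a difference between the two conditions moves R away from 1.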
Similarly, a value of R less than one would imply the opposite: that the group was wrong more often than expected, after taking personal bias into account. Under the null hypothesis we would expect both groups to have a ratio of 1 on the double-blind tests, but the results come out as follows:

As expected, the control group has a ratio extremely close to 1, which implies that, despite their tendency to guess that the exposure was sham, the results are very close to expectations for a group without the ability to discern their exposure. In contrast, the sensitive group were consistently better at accurately detecting whether the exposure was real or sham despite their tendency to report a real exposure when one was not present. As a further attempt to analyse this finding, we pooled together the double blind results, which gave some solidly supportive evidence that the judgement was indeed better in the sensitive group (we would expect an overall 50% success ratio at guessing the signal):

This shows a very clear increase in accuracy of detection by the sensitive group in the double-blind tests. According to the statistical analysis done in the study itself, this finding was non-significant (albeit close to the line between significance and non-significance). However, the authors have admitted that the resolving power (based on number of participants and number of tests) was not good enough to expect a significant finding. To say that there was no difference between the two sets of participants in their ability to detect whether the signal is real is a misrepresentation of the data. This finding in itself should have generated an official recommendation for further research with better resolving power.

Summary

"Short-term exposure to a typical GSM base station-like signal did not affect well-being or physiological functions in sensitive or control individuals. Sensitive individuals reported elevated levels of arousal when exposed to a UMTS signal. Further analysis, however, indicated that this difference was likely to be due to the effect of order of exposure rather than the exposure itself."

Eltiti et al, 2007, Abstract

Conclusions

Based on the data above, we believe that the words used by Eltiti et al in their 2007 provocation paper are highly misleading. We believe that the sensitive group shows very clear indications that the symptoms suffered do indeed correlate with the real exposures, and that it is only due to the self-confessed insufficient resolving power that the GSM results were not statistically significant. Considering that the GSM results are elevated (albeit non-significantly), it is unreasonable to assume that the increase in UMTS symptom severity is due to UMTS being the first exposure for a disproportionate number of participants.

We also believe that there is a good argument that the data shows quite clearly that the sensitive group appear better than the control group at discerning the difference between real and sham exposures. Again, within the statistical work of the paper, these increases were found to be non-significant, but again this is highly likely to be due to the lack of resolving power in the study. It would have been more appropriate to state that the findings were not significant, while noting the difficulty of achieving significance given the resolving power. The findings are therefore not convincing but should be of concern, and warrant a recommendation of further research with a larger and filtered set of sensitive participants.

References:

Cohen A et al, (February 2008) Response: Sensitivity to Mobile Phone Base Station Signals, Environ Health Perspect. 2008 Feb;116(2)

Eltiti S et al, (November 2007) Does short-term exposure to mobile phone base station signals increase symptoms in individuals who report sensitivity to electromagnetic fields? A double-blind randomized provocation study, Environ Health Perspect. 2007 Nov;115(11):1603-8

Eltiti S et al, (February 2007) Development and evaluation of the electromagnetic hypersensitivity questionnaire, Bioelectromagnetics. 2007 Feb;28(2):137-51

Also in the news

Cindy Sage summarises the BioInitiative report on YouTube

An interview with Cindy Sage, the primary author of the BioInitiative report, has been posted on the video sharing service YouTube. The 38 minute clip covers the release of the report last year. This is an excellent summary of both the report itself, and the decisions and approach that were taken to produce the report in the first place. It covers some of the sections of the report in considerable detail.

Martin Walker produces an expose of the Guardian

Martin Walker has produced an excellent 19 page essay, entitled "Guardian of What? - The Guardian, the Science Lobby, and the Rise of Scientific Corporatism", covering corporate influence on science and media in the UK. It is primarily focused on the Andrew Wakefield / MMR controversy, but broadens its remit to cover general issues of misrepresentation of science in the media (and particularly the Guardian!). It is entertaining as well as insightful, and is well worth reading.
